I worked on a containerized directory application to get familiar with Amazon EKS and Kubernetes on AWS. The project involved building Docker images, pushing them to Amazon ECR, configuring IAM policies, and applying Kubernetes manifests to deploy backend and frontend services on EKS.

Architecture Overview

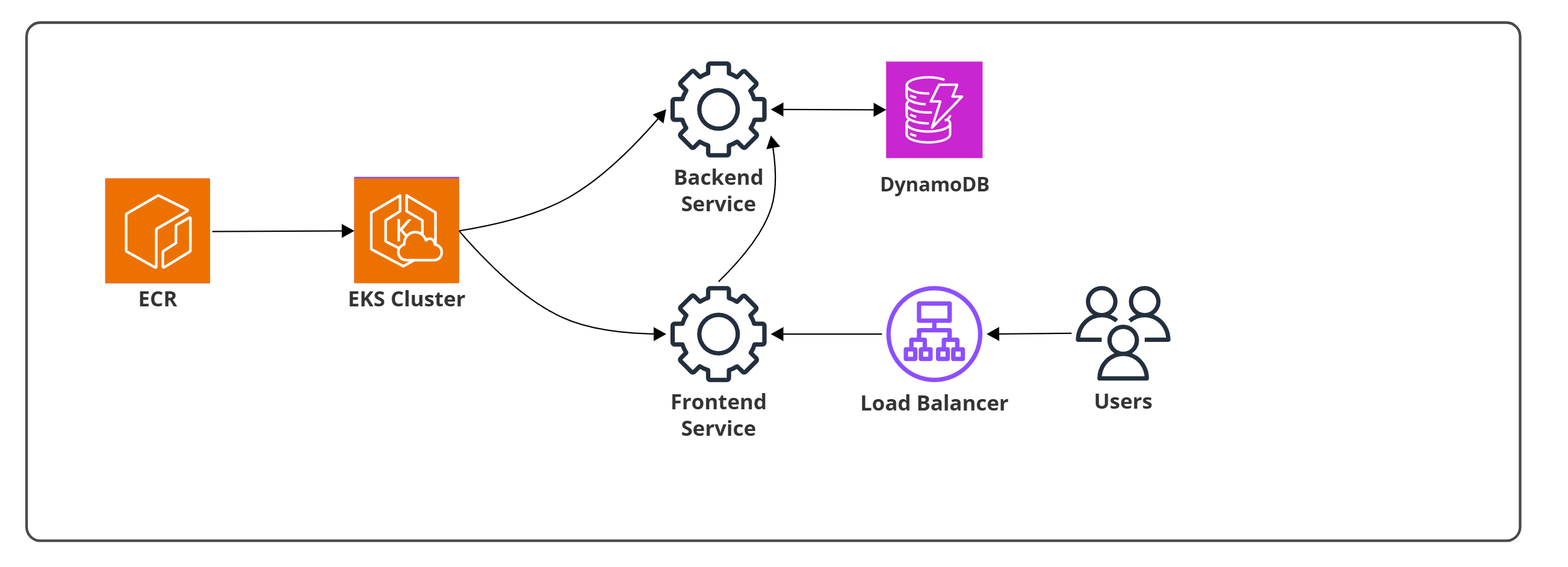

The system consisted of:

- Amazon ECR storing Docker images for backend and frontend

- Amazon EKS cluster running both services as pods

- Backend Service interacting securely with Amazon DynamoDB via IRSA

- Frontend Service exposed through a load balancer to external users

- Internal communication between frontend and backend services

Architecture showing how Amazon ECR stores container images that are deployed to an Amazon EKS cluster. The cluster runs both frontend and backend services: the backend communicates with Amazon DynamoDB for data persistence, while the frontend is exposed through a load balancer that serves external users. The frontend communicates internally with the backend to complete requests.
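The frontend does not need the backend's pod IP addresses to reach it. It can use the backend Service's cluster DNS name, which stays stable as pods come and go. This is a minimal sketch. It assumes the default cluster domain `cluster.local` and a hypothetical Service named `directory-service` in the `default` namespace on port 80.

```python
def service_url(service: str, namespace: str = "default", port: int = 80, path: str = "") -> str:
    """Build the in-cluster URL for a Kubernetes Service.

    Assumes the default cluster domain "cluster.local". Pods in the same
    namespace could also use just the bare service name.
    """
    return f"http://{service}.{namespace}.svc.cluster.local:{port}{path}"


# Hypothetical backend Service name and port; adjust to match the real manifests.
BACKEND_URL = service_url("directory-service", port=80, path="/employee")
print(BACKEND_URL)
```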

Installing eksctl and kubectl

I began by installing the Kubernetes command line tools:

- eksctl to create and manage the EKS cluster

- kubectl to interact with the cluster
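Before going further, it is worth confirming that both tools are on the `PATH`. This sketch uses only the Python standard library. It reports where each binary was found and assumes nothing about the installed versions.

```python
import shutil


def find_tools(tools=("eksctl", "kubectl")) -> dict:
    """Map each CLI name to its resolved path, or None if it is not on PATH."""
    return {tool: shutil.which(tool) for tool in tools}


if __name__ == "__main__":
    for tool, path in find_tools().items():
        # `eksctl version` and `kubectl version --client` would print the versions.
        print(f"{tool}: {path or 'NOT FOUND'}")
```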

After setting environment variables for my AWS account ID and region, I authenticated Docker with ECR and created a repository for each part of the application:

- corpdirectory/service

- corpdirectory/frontend

I then built the backend and frontend images and pushed them to these repositories for use by the Kubernetes deployments.
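Every step here depends on the private ECR registry URI, which has the form `<account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>`. This sketch builds that URI and prints the login, create, tag, and push commands for both repositories. The environment variable names `ACCOUNT_ID` and `AWS_REGION`, the placeholder account `111122223333`, and the local image names are assumptions, not values from the project.

```python
import os


def ecr_registry(account_id: str, region: str) -> str:
    """Private ECR registry host for an account and region."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_image_uri(account_id: str, region: str, repo: str, tag: str = "latest") -> str:
    """Full image reference used by `docker push` and in Kubernetes manifests."""
    return f"{ecr_registry(account_id, region)}/{repo}:{tag}"


def push_commands(account_id, region, repo, local_image, tag="latest"):
    """Shell commands to authenticate, create the repository, tag, and push."""
    registry = ecr_registry(account_id, region)
    uri = ecr_image_uri(account_id, region, repo, tag)
    return [
        f"aws ecr get-login-password --region {region} | "
        f"docker login --username AWS --password-stdin {registry}",
        f"aws ecr create-repository --repository-name {repo} --region {region}",
        f"docker tag {local_image} {uri}",
        f"docker push {uri}",
    ]


if __name__ == "__main__":
    account = os.environ.get("ACCOUNT_ID", "111122223333")  # hypothetical variable name
    region = os.environ.get("AWS_REGION", "us-east-1")
    for repo in ("corpdirectory/service", "corpdirectory/frontend"):
        local = repo.split("/")[-1]  # hypothetical local image name
        for cmd in push_commands(account, region, repo, local):
            print(cmd)
```

The script only prints the commands so they can be reviewed before running. The `docker login` step is needed once per registry, not once per repository.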

IAM Policy for DynamoDB

Since the application depends on an Employees table in DynamoDB, I created a custom IAM policy granting the necessary permissions (GetItem, PutItem, UpdateItem, DeleteItem, Query, and Scan).
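A least-privilege policy for this use case can be expressed as a standard IAM policy document scoped to the single table ARN. This is a sketch, not the exact policy from the project. The region and account values are placeholders.

```python
import json

# The six DynamoDB actions listed above.
DYNAMODB_ACTIONS = ["GetItem", "PutItem", "UpdateItem", "DeleteItem", "Query", "Scan"]


def employees_table_policy(account_id: str, region: str, table: str = "Employees") -> dict:
    """IAM policy allowing only the listed actions on one DynamoDB table."""
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/{table}"
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": [f"dynamodb:{action}" for action in DYNAMODB_ACTIONS],
            "Resource": table_arn,
        }],
    }


if __name__ == "__main__":
    print(json.dumps(employees_table_policy("111122223333", "us-east-1"), indent=2))
```

Saved as `policy.json`, the output could be registered with `aws iam create-policy --policy-name CorpDirectoryDynamoDB --policy-document file://policy.json`. The policy name is hypothetical. If the service also queried a secondary index, the resource list would need the index ARN (`.../table/Employees/index/*`) as well.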

I updated the corp-eks-cluster.yaml configuration file to attach this policy to the service account that would be used by the backend pods. This allowed the directory service to interact directly with DynamoDB while following the principle of least privilege.
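In an eksctl `ClusterConfig`, IRSA is declared under `iam`. Setting `withOIDC: true` associates an OIDC provider with the cluster. Each entry in `serviceAccounts` tells eksctl to create a Kubernetes service account backed by an IAM role with the listed policies attached. This sketch generates such a config as a Python dict. The cluster name, service account name, policy name, and node group sizing are hypothetical placeholders.

```python
import json


def cluster_config(name, region, account_id, policy_name,
                   sa_name="directory-service", namespace="default"):
    """Return an eksctl ClusterConfig with one IRSA service account."""
    policy_arn = f"arn:aws:iam::{account_id}:policy/{policy_name}"
    return {
        "apiVersion": "eksctl.io/v1alpha5",
        "kind": "ClusterConfig",
        "metadata": {"name": name, "region": region},
        "iam": {
            # IRSA requires an IAM OIDC provider associated with the cluster.
            "withOIDC": True,
            "serviceAccounts": [{
                "metadata": {"name": sa_name, "namespace": namespace},
                # eksctl creates a role with this policy and annotates the service account with it.
                "attachPolicyARNs": [policy_arn],
            }],
        },
        "managedNodeGroups": [
            {"name": "ng-1", "instanceType": "t3.medium", "desiredCapacity": 2},  # placeholder sizing
        ],
    }


if __name__ == "__main__":
    cfg = cluster_config("corp-eks", "us-east-1", "111122223333", "CorpDirectoryDynamoDB")
    print(json.dumps(cfg, indent=2))
```

Because JSON is valid YAML, the printed output should be usable as a `corp-eks-cluster.yaml` file for `eksctl create cluster -f`.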

Updating Kubernetes Manifests

I modified the Kubernetes manifests to reference the correct container images in ECR:

- directory-service/kubernetes/deployment.yaml for the backend

- directory-frontend/kubernetes/deployment.yaml for the frontend

Each manifest was updated with:

- Correct ECR image paths

- Service account binding for DynamoDB access (backend only)

- Container ports for HTTP traffic
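The three changes map onto specific Deployment fields. `image` holds the ECR path, `serviceAccountName` binds the IRSA service account, and `containerPort` declares the HTTP port. This sketch builds a minimal Deployment for either service. It sets `serviceAccountName` only when one is passed, mirroring the backend-only binding. The names, port 8080, and placeholder image URI are assumptions.

```python
import json


def deployment(name, image, port, service_account=None, replicas=1):
    """Return a minimal apps/v1 Deployment manifest as a dict."""
    labels = {"app": name}
    pod_spec = {
        "containers": [{
            "name": name,
            "image": image,                      # full ECR image URI
            "ports": [{"containerPort": port}],  # HTTP port the app listens on
        }],
    }
    if service_account:
        # Binding the IRSA service account gives the pods the DynamoDB role.
        pod_spec["serviceAccountName"] = service_account
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {"metadata": {"labels": dict(labels)}, "spec": pod_spec},
        },
    }


if __name__ == "__main__":
    image = "111122223333.dkr.ecr.us-east-1.amazonaws.com/corpdirectory/service:latest"  # placeholder
    print(json.dumps(deployment("directory-service", image, 8080,
                                service_account="directory-service"), indent=2))
```

`kubectl apply -f` accepts JSON as well as YAML, so the printed manifest can be applied directly.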

Creating the Cluster

I created the EKS cluster using the corp-eks-cluster.yaml configuration. Once the cluster was active, I verified connectivity with kubectl and applied the backend manifests. I confirmed the backend was working by port-forwarding to it with kubectl and successfully querying its /employee endpoint.
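The port-forward check can be scripted. This sketch uses only the standard library. It assumes the `/employee` endpoint returns a JSON list, which is not confirmed by the project.

```python
import json
import urllib.request


def count_employees(base_url: str, timeout: float = 5.0) -> int:
    """GET {base_url}/employee and return how many records the JSON body holds.

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses and
    URLError if nothing is listening, so a return value means the call worked.
    Assumes the body is a JSON list of employee records.
    """
    with urllib.request.urlopen(f"{base_url}/employee", timeout=timeout) as resp:
        records = json.load(resp)
    return len(records)
```

For example, first run `kubectl port-forward service/directory-service 8080:80` in another terminal, then call `count_employees("http://localhost:8080")`. The Service name and ports there are hypothetical.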

Deploying the Frontend

I then deployed the frontend service and accessed it through the external load balancer endpoint once it was provisioned. Logs from the frontend pods confirmed that requests were flowing through to the backend service.
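A Service of type `LoadBalancer` is what asks AWS for the external load balancer. Its address appears in the Service's `status.loadBalancer.ingress` once provisioning finishes. This sketch builds such a Service and reads the endpoint from `kubectl get service NAME -o json` output. The Service name and ports are hypothetical.

```python
def load_balancer_service(name, port=80, target_port=8080):
    """Return a Service manifest that requests an external load balancer."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": name},  # must match the Deployment's pod labels
            "ports": [{"port": port, "targetPort": target_port, "protocol": "TCP"}],
        },
    }


def external_endpoint(service: dict):
    """Read the load balancer address from `kubectl get service NAME -o json`.

    Returns None while the load balancer is still being provisioned.
    """
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    if not ingress:
        return None
    # AWS load balancers report a DNS hostname; some providers report an IP instead.
    return ingress[0].get("hostname") or ingress[0].get("ip")
```

Once an endpoint is returned, `kubectl logs deployment/directory-frontend` shows the frontend's logs for one of its pods.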

Scaling the Services

I updated both deployment.yaml files to increase the number of replicas for the backend and frontend services. After redeploying, each service was backed by multiple pods. The Deployment keeps the requested number of replicas running and replaces pods that fail, while the Service spreads requests across the ready pods. Together they add both capacity and fault tolerance.
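Scaling amounts to changing `spec.replicas` and waiting until the Deployment reports that many updated, ready pods. This sketch does both against plain manifest dicts and `kubectl get deployment NAME -o json` output. It is an illustration, not the project's tooling.

```python
import copy


def with_replicas(manifest: dict, replicas: int) -> dict:
    """Return a copy of a Deployment manifest with spec.replicas changed."""
    scaled = copy.deepcopy(manifest)
    scaled["spec"]["replicas"] = replicas
    return scaled


def rollout_complete(deployment: dict) -> bool:
    """Check `kubectl get deployment NAME -o json` output for a finished scale-out.

    Kubernetes omits readyReplicas/updatedReplicas when they are zero,
    so missing fields are treated as 0.
    """
    wanted = deployment.get("spec", {}).get("replicas", 1)
    status = deployment.get("status", {})
    return (status.get("updatedReplicas", 0) >= wanted
            and status.get("readyReplicas", 0) >= wanted)
```

Without editing files, `kubectl scale deployment/directory-service --replicas=3` changes the count in place, and `kubectl rollout status deployment/directory-service` waits for the rollout. The Deployment name is hypothetical.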

Takeaways

This project helped me gain hands-on experience with EKS and Kubernetes on AWS. I learned how to:

- Install and use eksctl and kubectl for cluster management

- Push Docker images to Amazon ECR and reference them in Kubernetes manifests

- Use IAM roles for service accounts (IRSA) to grant pods DynamoDB access

- Deploy and scale backend and frontend services on an EKS cluster

- Validate deployments through service endpoints and pod logs

This project gave me practical knowledge of running containerized applications on AWS with Kubernetes, connecting Docker-based workflows with cloud-native orchestration.